SInnoPSis Newsletter #9

Origin Story - ROB-ME - A Critical Perspective on Effect Size - UK Recruitment Reform - Over 1 Billion - A Difficult Time for the French Doctor - Tell Me How to Wash My Hands

Origin Story

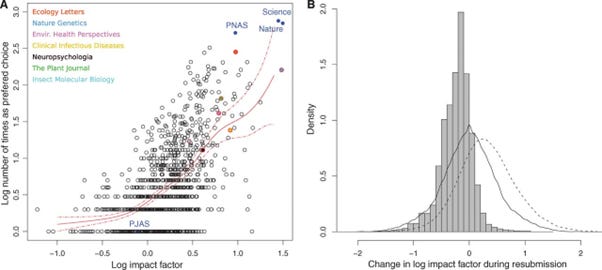

A study examined the submission histories of articles published in 923 biological-science journals between 2006 and 2008. About 75% of published articles had first been submitted to the journal that eventually accepted them. Surprisingly, the fraction of first-intent publications decreased as the journal's impact factor increased. Journals with higher impact factors are chosen more often as the first submission target, yet a smaller share of the papers they actually publish were submitted to them first.

Many accepted papers in PNAS and even some in Science and Nature were first rejected elsewhere.

This finding matters for meta-science and citation analysis: some authors assume that resubmission improves a paper's quality, and might try to measure that improvement through citation counts.

https://www.science.org/doi/10.1126/science.1227833

ROB-ME

A new tool, ROB-ME, is now available for assessing the risk of bias due to missing evidence in systematic reviews with meta-analysis. This structured approach focuses on situations in which entire studies, or particular results within studies, are missing from a meta-analysis because of the p-value, magnitude, or direction of their results. These factors affect whether a finding is published at all, where it is published, and how long publication takes.

https://www.bmj.com/content/383/bmj-2023-076754

A Critical Perspective on Effect Size

What is the problem with the following test result: F(1, 58) = 81.36, p < .001, η² = .558?

The sample size might be sufficient, the p-value indicates a significant effect, and η² indicates that the predictor explains 55.8% of the variance. That is a strong result that could easily be published.

Yes, but it is actually too good to be true.

“Some effect sizes are simply too large. I would be extremely distrustful of someone who told me that their dad is 20ft tall, no matter how many pictures they provide, because it contradicts everything that we know about human size. […] A contextual manipulation (whatever that is) cannot explain more than 50% of the variance in a phenomenon as multiply determined as Willingness to Pay. If an effect this large existed, Plato or Aristotle would have written about it, and companies all around the world would rely on it everyday: It wouldn’t be a “novel finding” in a marketing journal. Even manipulation checks typically have smaller effect sizes.” - explains Quentin André.

His article offers further insight into how to interpret effect sizes:

https://quentinandre.net/post/a-critical-perspective-on-effect-sizes/
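As a rough check on the test above, partial η² can be recovered from a reported F statistic as η²ₚ = F·df₁ / (F·df₁ + df₂). The minimal sketch below assumes the test reads F(1, 58) = 81.36; the helper `partial_eta_squared` is hypothetical and does not come from André's post. It yields roughly 0.58, in the same range as the reported .558 (the reported value may be a classical rather than a partial η², so the two need not match exactly).

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Hypothetical helper: partial eta squared from an F statistic.

    Since F = (SS_effect / df_effect) / (SS_error / df_error),
    SS_effect / (SS_effect + SS_error) = F*df_effect / (F*df_effect + df_error).
    """
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Assumes the garbled test in the text reads F(1, 58) = 81.36.
eta_p2 = partial_eta_squared(81.36, df_effect=1, df_error=58)
print(f"partial eta squared ~ {eta_p2:.3f}")
```

Either way, a single manipulation would be credited with more than half of the variance, which is exactly the implausibility André points to.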

UK Recruitment Reform

The United Kingdom Reproducibility Network (UKRN) has formally launched one of the most extensive efforts worldwide to change how open research is recognised and valued in the recruitment, promotion, and evaluation of researchers. Named “OR4”, the programme lists 43 UK-based academic research institutions that have chosen to take part, either as case studies or as members of a broader community of practice.

https://www.ukrn.org/2023/11/20/43-uk-institutions-reforming-recruitment-and-promotion/

Over 1 Billion

It has been estimated that the scientific community paid the five major scholarly publishers USD 1.06 billion, using public funds, over a span of four years. Notably, this figure covers only the cost of publishing open-access research articles. According to a calculation by Stefanie Haustein’s team, Springer Nature earned the most, with $589.7 million, followed by Elsevier ($221.4 million), Wiley ($114.3 million), Taylor & Francis ($76.8 million), and Sage ($31.6 million), mostly through APCs (article processing charges). Expressed per article, this comes to about $2,500. Two of the most prominent journals, Scientific Reports and Nature Communications, accounted for a large share of this income, with $105.1 million and $71.1 million, respectively. Scientific Reports published almost 22,000 articles a year, charging $2,490 for each, while Nature Communications published about 7,500 articles a year at $6,490 per article.
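A quick way to check the per-publisher breakdown is to add up the figures and compute each publisher's share of the total. This is a minimal sketch that uses only the numbers quoted above; the dictionary name is illustrative.

```python
# APC revenue per publisher in USD millions, as quoted in the newsletter.
apc_revenue_musd = {
    "Springer Nature": 589.7,
    "Elsevier": 221.4,
    "Wiley": 114.3,
    "Taylor & Francis": 76.8,
    "Sage": 31.6,
}

# Combined total of the five listed figures.
total = sum(apc_revenue_musd.values())
print(f"Sum of the five figures: ${total:,.1f} million")

# Each publisher's share of that combined total, largest first.
for publisher, revenue in sorted(apc_revenue_musd.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{publisher:<17} ${revenue:>6.1f}M  {revenue / total:6.1%}")
```

The five figures sum to $1,033.8 million, a little below the $1.06 billion headline; the text does not say where the remaining roughly $26 million comes from. Springer Nature alone accounts for about 57% of the listed sum.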

Researchers have not minced words. French sociologist Pierre Bataille calls the publishers’ charges “research vampirization,” and Stefanie Haustein has described the profit margins of the main publishers as “obscene.”

For example, the Dutch giant Elsevier has an annual income of $3.5 billion, with $1.3 billion in profit, according to its 2022 accounts. “This means that for every $1,000 that the academic community spends on publishing in Elsevier, about $400 go into the pockets of its shareholders,” Haustein explains.
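Haustein’s rule of thumb follows from dividing profit by revenue. The sketch below assumes, as her remark implies, that Elsevier’s company-wide margin applies equally to every dollar spent on publishing with it; the helper `profit_per_thousand` is hypothetical.

```python
def profit_per_thousand(revenue: float, profit: float, spend: float = 1_000.0) -> float:
    """Hypothetical helper: portion of `spend` that ends up as profit.

    Assumes the company-wide profit margin (profit / revenue) applies
    uniformly to every dollar spent with the publisher.
    """
    margin = profit / revenue
    return spend * margin


# Elsevier's 2022 figures as quoted in the text, in USD billions.
share = profit_per_thousand(revenue=3.5, profit=1.3)
print(f"About ${share:.0f} of every $1,000 is profit")
```

With the quoted 2022 figures this gives roughly $371 per $1,000, close to the “about $400” she cites.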

A Difficult Time for the French Doctor

A group of investigators has identified 456 medical studies conducted by the Institut Hospitalo-Universitaire - Méditerranée Infection (IHU-MI) “with serious concerns about their adherence to ethical standards.” Specifically, they found that approximately 250 of these studies cited the same ethics approval reference number, despite considerable differences in study design. Of the studies implicated in potential ethical breaches, 135 were published in the journal New Microbes and New Infections.

As Lonni Besançon, one of the whistleblowers, put it: “Publishers don't care about integrity.”

Tell Me How to Wash My Hands

During the COVID-19 pandemic, researchers asked participants to recall and estimate how often they had performed routine behaviours on the previous day, such as washing or sanitizing their hands, or being within 2 meters of another person for more than 2 minutes.

Before asking these questions, the researchers asked participants whether the frequency of their own behaviour was above, below, or equal to a benchmark value, or “anchor”.

Participants given the “low” anchor reported significantly lower frequencies than those given the “high” anchor. For example, 86% of participants in the high-anchor group, but only 52% in the low-anchor group, said that they washed their hands more than 10 times a day. In other words, self-reported behaviours are highly susceptible to contextual influence.

The researchers conclude that routine behaviour should be observed in its natural context.